When we look out on the world, our brains are confronted by extremely high-dimensional and noisy perceptual inputs, from which we manage to extract stable and highly structured abstractions — objects, relations, and abstract patterns that capture the higher-order regularities in the world around us. These abstractions are, in turn, critical to the unique human capacity to reason about and rapidly adapt to new situations.

My work aims to understand how the human brain accomplishes this remarkable feat. This work draws on concepts and tools from several disciplines, including vision science, reasoning and decision making, cognitive neuroscience, and artificial intelligence. I am particularly interested in developing models that use tools from AI to extract rich representations from real-world data, in concert with normative cognitive models that can provide principled explanations of phenomena at both the behavioral and neural levels. I'm also interested in the development of AI systems that take inspiration from cognitive models to achieve more human-like reasoning and generalization.

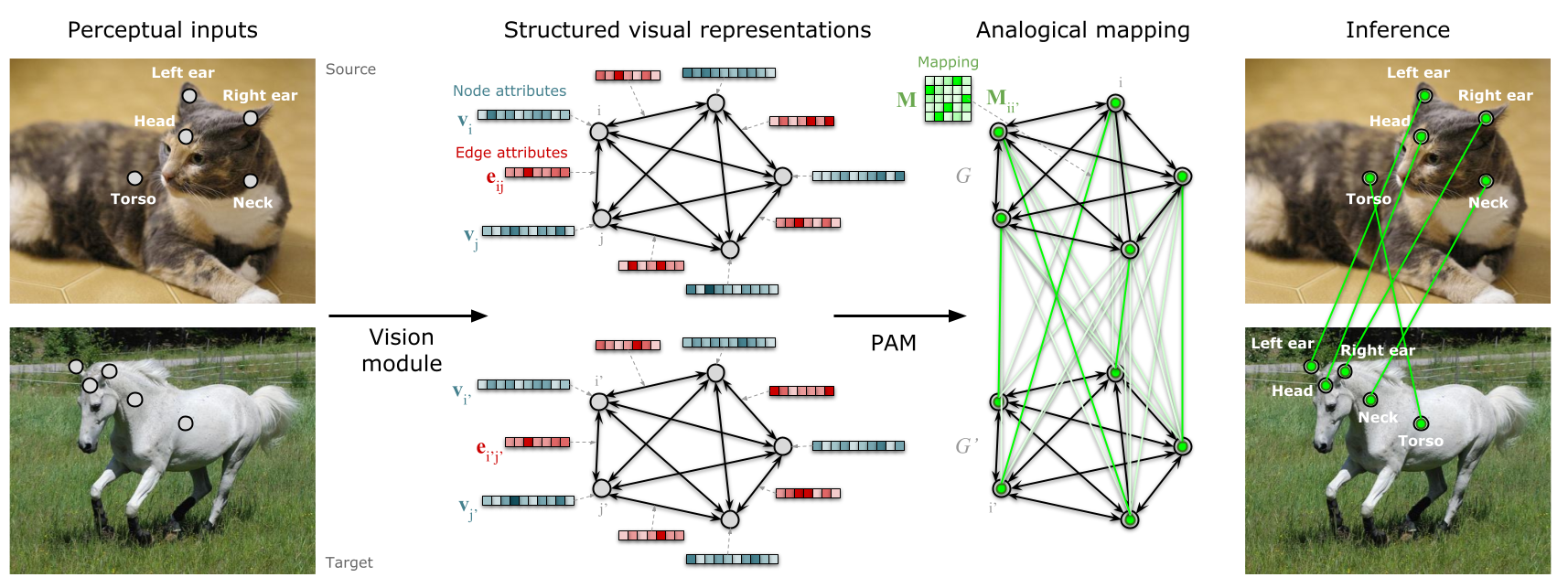

Analogy is at the heart of our ability to reason about new and unfamiliar situations. When confronted with a new problem, human reasoners can often identify a reasonable strategy by making an analogy to a more familiar setting. Cognitive science has identified many of the basic processes that underlie this capacity — mapping, inference, schema induction — but models of these processes have generally been limited to highly constrained, idealized representations, leaving open the question of how human analogical reasoning operates in the context of complex, naturalistic inputs. In a recent line of work, my collaborators and I have been developing a new computational framework for understanding analogy that brings together learned representations extracted from real-world data (e.g., images or natural language) with structured reasoning operations derived from cognitive theories. This approach is illustrated in our recent paper on visual Probabilistic Analogical Mapping (visiPAM). In related work, I have also investigated the emergent analogical reasoning abilities of large language models.

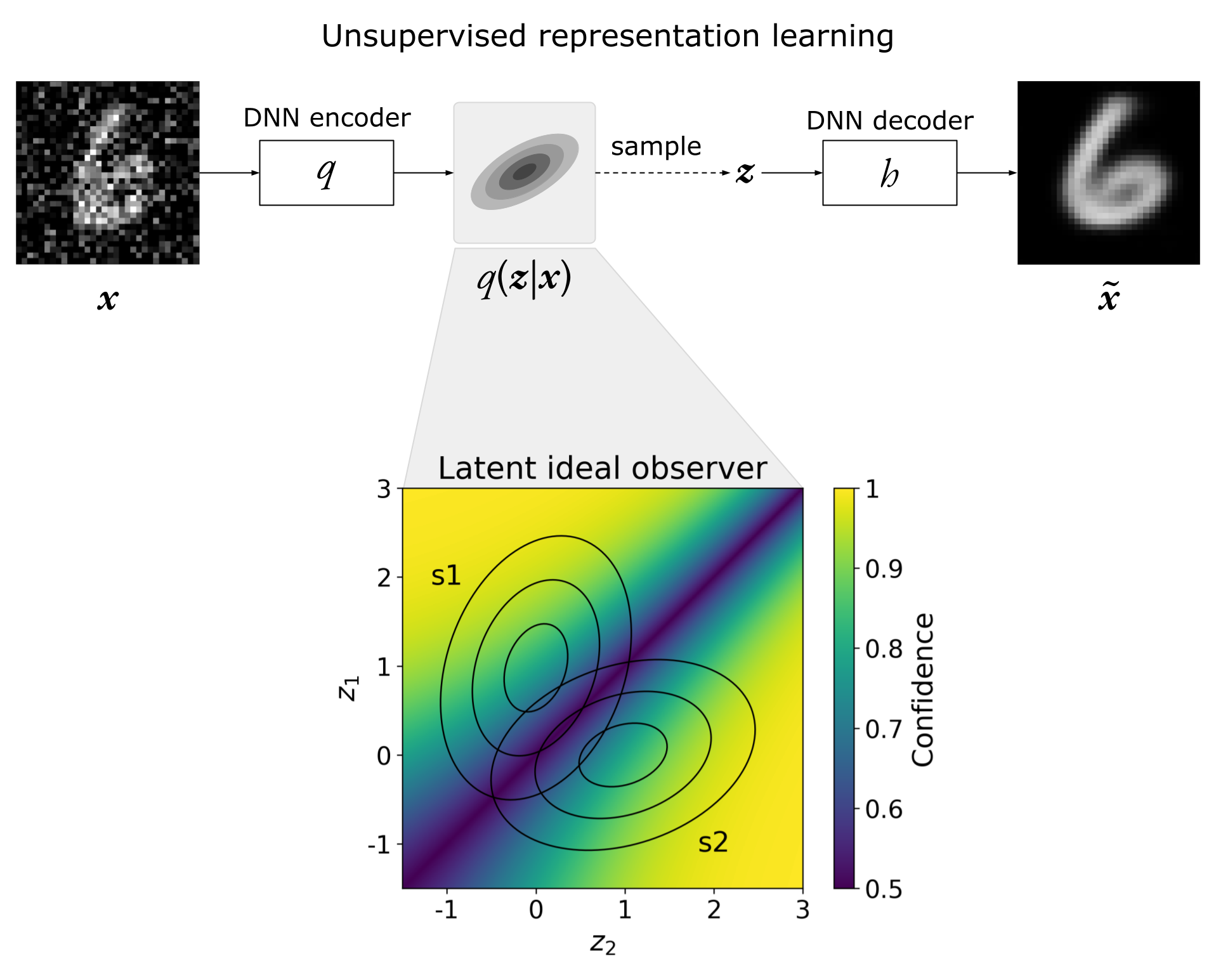

When faced with a decision, we have the ability not only to choose from a set of possible options, but also to assess how confident we are in our choice. This capacity for metacognition (the ability to evaluate our own thoughts and decisions) is a critical part of our decision-making and reasoning processes. What are the computational mechanisms that underlie this capacity, and how might they be grounded in the complex, real-world inputs over which the brain must operate? Together with my collaborators, I recently developed a model of decision confidence that uses deep neural networks to operate over naturalistic images. This model provides a normative account of a range of puzzling, and apparently suboptimal, features of decision confidence. It also makes a number of behavioral and neural predictions that we are currently testing. You can read more about this work in our recent paper.

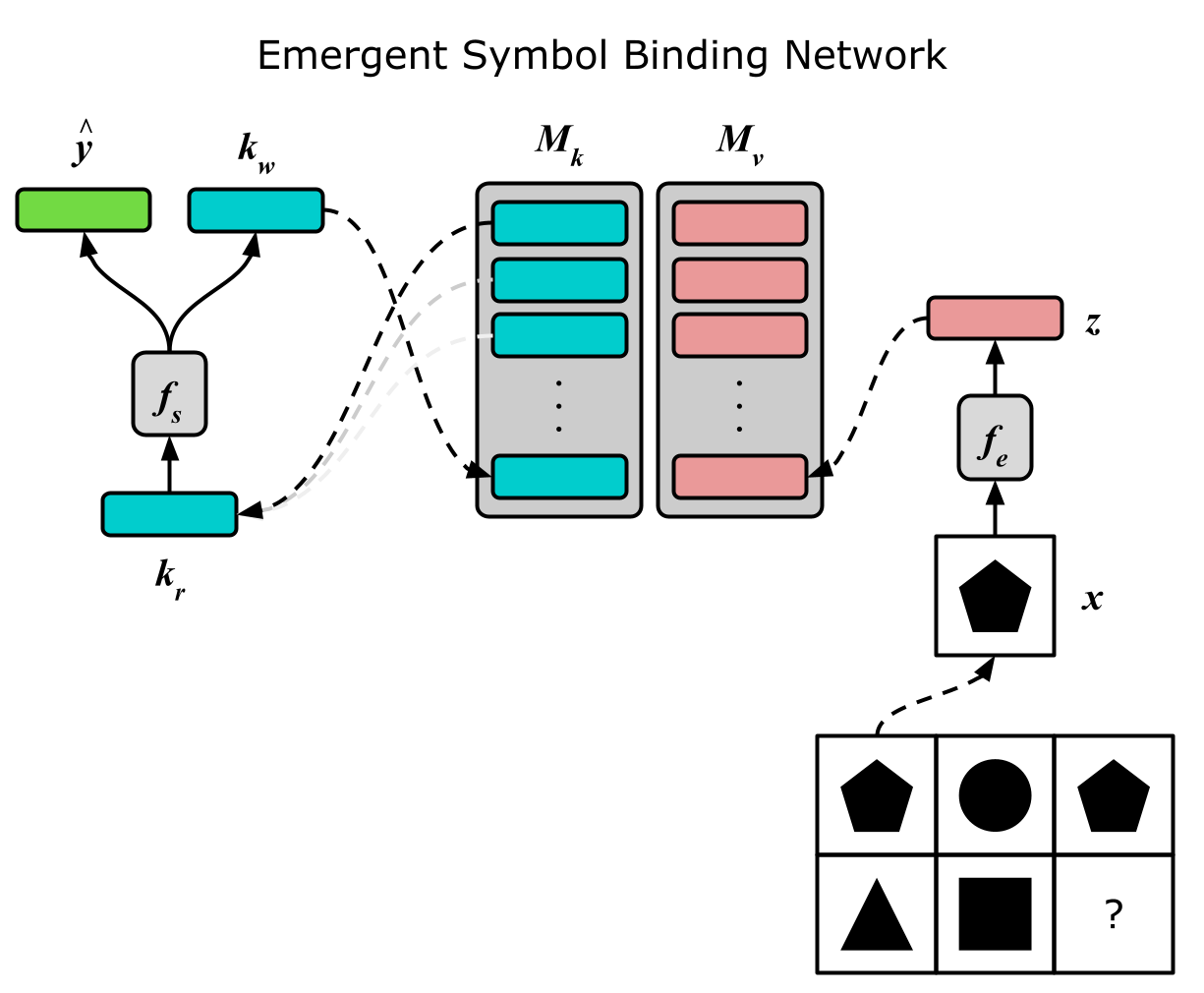

Deep neural networks have achieved remarkable breakthroughs in recent years, particularly in handling high-dimensional, real-world perceptual inputs such as images. These networks, however, are often too strongly tied to the statistics of the data on which they are trained, and fail to capture the capacity for generalization that characterizes human cognition. In machine learning, this is often referred to as the problem of out-of-distribution (OOD) generalization: the ability of a learning algorithm to generalize beyond the distribution of its training data. In human cognition, this ability is closely tied to a capacity for reasoning about relations. A major focus of my research is the development of novel neural network methods that incorporate this human-like bias toward relational processing. Such methods have the potential both to contribute to advances in AI and to help us understand how the human brain achieves such generalization and abstraction. Examples of this approach include my work on context normalization and emergent symbol binding. I have also explored the role that object-centric processing plays in visual reasoning, and the potential synergy between object-centric and relational inductive biases.
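
As a rough illustration of why a relational bias can help with OOD generalization, the sketch below assumes that context normalization amounts to standardizing each feature across the items of a single task context. That is a simplification for illustration, and the published method may differ in detail (for example, by adding learned scale and shift parameters). The function name `context_normalize` and the toy data are hypothetical.

```python
import math


def context_normalize(context, eps=1e-8):
    """Z-score each feature across the items of one task context.

    `context` is a list of equal-length feature vectors (lists of floats).
    Illustrative assumption: statistics are computed per context, not
    over the whole training set.
    """
    n = len(context)
    dims = len(context[0])
    # Per-feature mean across the items in this context.
    means = [sum(item[d] for item in context) / n for d in range(dims)]
    # Per-feature standard deviation, with eps for numerical stability.
    stds = [
        math.sqrt(sum((item[d] - means[d]) ** 2 for item in context) / n + eps)
        for d in range(dims)
    ]
    return [
        [(item[d] - means[d]) / stds[d] for d in range(dims)]
        for item in context
    ]


# A context in a "training-like" range, and one far outside that range
# that has the same relational structure (evenly spaced values).
train_like = [[1.0], [2.0], [3.0]]
shifted = [[100.0], [200.0], [300.0]]

norm_train = context_normalize(train_like)
norm_shifted = context_normalize(shifted)
```

After normalization the two contexts are nearly identical, because only the relations among the items in a context survive while the absolute magnitudes are discarded. A downstream network would therefore see inputs from the out-of-range context in the same form as those it was trained on.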

What is subjective experience, and how could it possibly result from the computational mechanisms in the brain? Together with Michael Graziano, I helped to develop and test the Attention Schema Theory (AST), a theoretical account of the computational and neural basis of subjective experience. According to this view, our reports concerning subjective experience are based on a metacognitive model of our own mental processes. In particular, AST proposes that subjective reports arise from an implicit model of our own attentional processes, computed in part to facilitate the effective control of attention. We tested and confirmed some of the predictions that follow from this view in a series of psychophysical experiments, and identified some of the cortical networks involved in subjective visual awareness. AST also suggests an interesting link between the neural mechanisms supporting subjective experience and those supporting social cognition.